'SixthSense' is a wearable gestural interface that augments the physical world around us with digital information and lets us use natural hand gestures to interact with that information.

We've evolved over millions of years to sense the world around us. When we encounter something, someone or some place, we use our five natural senses to perceive information about it; that information helps us make decisions and choose the right actions to take. But arguably the most useful information that can help us make the right decision is not naturally perceivable with our five senses, namely the data, information and knowledge that mankind has accumulated about everything and which is increasingly available online. Although the miniaturization of computing devices allows us to carry computers in our pockets, keeping us continually connected to the digital world, there is no link between our digital devices and our interactions with the physical world. Information is traditionally confined to paper or to a screen. SixthSense bridges this gap, bringing intangible, digital information out into the tangible world, and allowing us to interact with this information via natural hand gestures. SixthSense frees information from its confines by seamlessly integrating it with reality, thus making the entire world your computer.

The SixthSense prototype comprises a pocket projector, a mirror and a camera. The hardware components are coupled in a pendant-like wearable device. Both the projector and the camera are connected to the mobile computing device in the user’s pocket. The projector projects visual information, enabling surfaces, walls and physical objects around us to be used as interfaces, while the camera recognizes and tracks the user’s hand gestures and physical objects using computer-vision techniques. The software processes the video stream captured by the camera and tracks the locations of the colored markers (visual tracking fiducials) at the tips of the user’s fingers. The movements and arrangements of these fiducials are interpreted as gestures that act as interaction instructions for the projected application interfaces. The maximum number of tracked fingers is constrained only by the number of unique fiducials, so SixthSense also supports multi-touch and multi-user interaction.
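To make the marker tracking idea concrete, here is a minimal sketch in plain Python. It is not the SixthSense code: the function names, the RGB tolerance value and the tiny list-of-lists "frame" are all assumptions made for illustration. A real system would read camera frames and would probably use a vision library. The sketch only shows the core idea of finding each colored fingertip marker by color and reducing it to a single position.

```python
from typing import Dict, List, Optional, Tuple

Pixel = Tuple[int, int, int]   # (R, G, B), each 0-255
Frame = List[List[Pixel]]      # frame[y][x]; a stand-in for one camera image
Point = Tuple[float, float]    # (x, y) in pixel coordinates


def color_distance_sq(a: Pixel, b: Pixel) -> int:
    """Squared Euclidean distance between two RGB colors."""
    return sum((ca - cb) ** 2 for ca, cb in zip(a, b))


def find_marker(frame: Frame, target: Pixel, tolerance: int = 60) -> Optional[Point]:
    """Return the centroid of all pixels close to `target`, or None if none match.

    `tolerance` is a hypothetical tuning value, not taken from the prototype.
    """
    limit = tolerance ** 2
    sum_x = sum_y = count = 0
    for y, row in enumerate(frame):
        for x, pixel in enumerate(row):
            if color_distance_sq(pixel, target) <= limit:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None
    return (sum_x / count, sum_y / count)


def track_markers(frame: Frame, markers: Dict[str, Pixel],
                  tolerance: int = 60) -> Dict[str, Point]:
    """Locate every named marker that is visible in the frame."""
    positions: Dict[str, Point] = {}
    for name, color in markers.items():
        centroid = find_marker(frame, color, tolerance)
        if centroid is not None:
            positions[name] = centroid
    return positions


if __name__ == "__main__":
    black = (0, 0, 0)
    frame = [[black] * 6 for _ in range(4)]
    frame[1][1] = frame[1][2] = (250, 10, 10)   # reddish marker, two pixels wide
    frame[3][4] = (10, 10, 250)                 # bluish marker, one pixel
    markers = {"index": (255, 0, 0), "thumb": (0, 0, 255), "middle": (0, 255, 0)}
    print(track_markers(frame, markers))
    # {'index': (1.5, 1.0), 'thumb': (4.0, 3.0)}
```

Because each marker is looked up by its own color, adding another finger or another user is just another entry in the `markers` dictionary, which matches the point above that the number of tracked fingers is limited only by the number of unique fiducials.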

The SixthSense prototype implements several applications that demonstrate the usefulness, viability and flexibility of the system. The map application lets the user navigate a map displayed on a nearby surface using hand gestures similar to those supported by multi-touch systems, letting the user zoom in, zoom out or pan with intuitive hand movements. The drawing application lets the user draw on any surface by tracking the movements of the user’s index fingertip. SixthSense also recognizes the user’s freehand gestures (postures). For example, the system implements a gestural camera that takes photos of the scene the user is looking at by detecting the ‘framing’ gesture. The user can stop by any surface or wall and flick through the photos they have taken. SixthSense also lets the user draw icons or symbols in the air with the index finger and recognizes those symbols as interaction instructions. For example, drawing a magnifying glass symbol takes the user to the map application, and drawing an ‘@’ symbol lets the user check their mail. The system also augments physical objects the user is interacting with by projecting additional information onto them. For example, a newspaper can show live video news, or dynamic information can be provided on a regular piece of paper. Drawing a circle on the user’s wrist projects an analog watch.
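The zoom and pan gestures in the map application can be sketched the same way. This is an illustrative guess at the logic, not the prototype's implementation: the function name, the two thresholds and the returned labels are all made up for the example. It takes the positions of two fingertip markers in the previous and current frames and decides whether the fingers moved apart (zoom in), together (zoom out), or moved as a pair (pan).

```python
import math
from typing import Tuple

Point = Tuple[float, float]
FingerPair = Tuple[Point, Point]   # positions of two tracked fingertip markers


def distance(a: Point, b: Point) -> float:
    return math.hypot(b[0] - a[0], b[1] - a[1])


def midpoint(a: Point, b: Point) -> Point:
    return ((a[0] + b[0]) / 2, (a[1] + b[1]) / 2)


def interpret_two_finger_gesture(prev: FingerPair, curr: FingerPair,
                                 zoom_threshold: float = 0.1,
                                 pan_threshold: float = 5.0) -> Tuple[str, object]:
    """Classify the change between two frames as zoom, pan or nothing.

    Returns (label, value): a scale factor for zooms, an (dx, dy) shift
    for pans, and None when the movement is below both thresholds.
    Both thresholds are hypothetical tuning values.
    """
    prev_dist = distance(*prev)
    curr_dist = distance(*curr)
    scale = curr_dist / prev_dist if prev_dist > 0 else 1.0

    # Fingers moving apart or together by more than the threshold: zoom.
    if abs(scale - 1.0) > zoom_threshold:
        return ("zoom_in" if scale > 1.0 else "zoom_out", scale)

    # Otherwise, if the pair moved together far enough, treat it as a pan.
    prev_mid = midpoint(*prev)
    curr_mid = midpoint(*curr)
    shift = (curr_mid[0] - prev_mid[0], curr_mid[1] - prev_mid[1])
    if math.hypot(*shift) > pan_threshold:
        return ("pan", shift)

    return ("none", None)


if __name__ == "__main__":
    before = ((0.0, 0.0), (10.0, 0.0))
    spread = ((-5.0, 0.0), (15.0, 0.0))
    print(interpret_two_finger_gesture(before, spread))
    # ('zoom_in', 2.0)
```

Checking the zoom first and the pan second is a design choice in this sketch: a spreading gesture usually shifts the midpoint a little too, so testing the distance ratio first keeps a zoom from being misread as a pan.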

The current prototype system costs approximately $350 to build.

Now What?

There was a time a few years ago when Pranav Mistry had become everyone’s favourite engineer-scientist. As part of the team that unveiled the SixthSense technology, Mistry mesmerized us. The coloured finger caps, the small and sleek projector-cum-camera, and the necessary theatrics of any tech launch led us to believe that SixthSense was the future, and Mistry was the charioteer.

But then, you know, we were like, what happened?

Mistry disappeared from public view for a while, and we’ve all been asking ourselves – so what happened to the guy? What happened to SixthSense?

Let’s start with the tech. SixthSense today exists as a project under Pranav Mistry’s name on his personal website. The simple-looking home page just has text on it, but don’t be put off – pictures and videos are a click away.

But most importantly – the hardware and software assembly instructions are open source. The link is at the bottom of the page. It doesn’t seem like they’ve updated the page much, but these guys assembled their own, so I suppose so can you! This author, meanwhile, has not seen any news of SixthSense being commercialized, and would be happy to be told about it.

Meanwhile, Pranav Mistry’s moved on to other things. He’s currently heading the Think Tank Team and is the Director of Research at Samsung, where he’s busy developing the latest in Samsung’s arsenal of cutting-edge technologies.

That’s a pretty good job, I would think.

A couple of months ago, Mistry unveiled the new Samsung Gear ‘smartwatch’, which can call, message, take pictures, play music and run a host of other apps right from your wrist.

By the way, Samsung overtook Apple as the world’s largest seller of smartphones last year. I feel pretty certain the tech has something to do with that.

If you’re interested in the work Mistry’s been doing apart from the viral SixthSense project, have a look at his website.

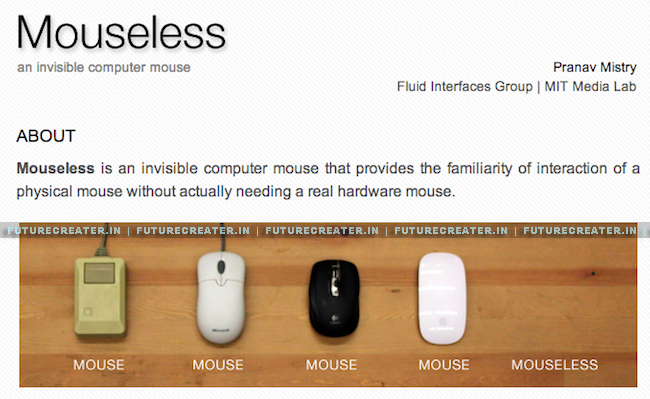

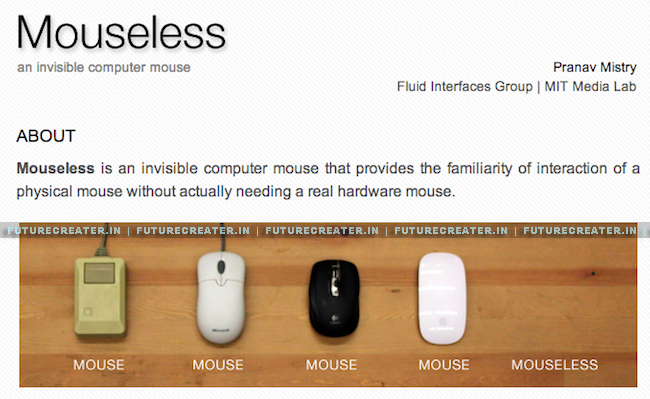

Some of his projects are incredibly cool – my personal favorite is the Mouseless project, which basically just takes a normal mouse and makes it disappear. People are busy designing the most ergonomic shape and size for a mouse that can fit various hand sizes, and Mistry just goes and eliminates the physical body.

I also enjoyed the idea of inktuitive, which allows you to draw as if on paper, but gets your computer to pick up what you’re drawing as digital signals. Here’s the kicker – it also liberates you from 2D drawing, because the sensors can detect in 3D space.

To close, here’s Mr. Mistry describing himself on his website. Read this out loud for maximum comic effect. What a simple, wonderful guy!

I, myself am pranav|mistry. ‘zombie’ is my nick name. My father Kirti Mistry is an architect & a technocrat. My loving mummy Nayana cares us all. I have two sisters, ‘Sweta’ & ‘Jigna’. I have a nephew named ‘Jini’. I love my family a lot. I have lots of friends, too. My family is big. I have 5 ‘masis’ & 3 ‘mamas’ & 5 ‘kakas’ & 2 ‘fois’ & … &… & …meet them all and my friends in my photo gallery.
